Andy J, post: 431836, member: 44 wrote: Nice work! Do you do your traverses solo??

Yes, that was just me and some prism pole tripods. That and 8-minute vials on the prism poles did the trick.

You mentioned doing this as efficiently as possible. Is it possible that hitting all the control points 2 - 24 with the PPK GPS is overly redundant? Would your results be sufficiently good if you had only observed say 1, 5, 10, 19, & 24? How long did you spend observing each point?

Peter Lothian - MA ME, post: 431844, member: 4512 wrote: You mentioned doing this as efficiently as possible. Is it possible that hitting all the control points 2 - 24 with the PPK GPS is overly redundant? Would your results be sufficiently good if you had only observed say 1, 5, 10, 19, & 24? How long did you spend observing each point?

The observation times were five minutes on each point, which didn't seem like much of an investment of time to me. That particular series of control points mixes legs of different lengths; the short legs make weak points in the conventional connections, and the GPS vectors strengthened those.

I suppose that I could demonstrate this, but I think it's fairly straightforward that as the spacing of the GPS-derived positions gets wider, the quality of the combined conventional and GPS solution drops off significantly, in a way that does not happen with a tighter spacing of GPS-derived positions. That is, if you have GPS-derived positions on 2, 3 and 4 that are directly connected by conventional angles, distances and elevation differences with an uncertainty on the order of about 2 mm, the adjusted results for 2, 3 and 4 approach what three GPS occupations of each point would yield. I may work the numbers on this example just to demonstrate the drop in quality with a sparser set of GPS vectors.
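To put rough numbers on the "three occupations" idea, here is a minimal Python sketch under a deliberately simplified one-dimensional model (an assumption for illustration, not how Star*Net adjusts a 3D network). Point 3 gets its own GPS position plus the GPS positions of 2 and 4, each carried to it through one conventional tie, and the three independent estimates are combined as an inverse-variance weighted mean. The function name and the standard errors are hypothetical.

```python
import math


def middle_point_sigma(sigma_gps: float, sigma_tie: float) -> float:
    """Standard error of a point whose own GPS position is combined with the
    GPS positions of its two neighbours, each carried over one conventional tie.

    Simplified 1D model (assumption): three independent estimates,
      own GPS position:             variance sigma_gps**2
      neighbour GPS + tie (twice):  variance sigma_gps**2 + sigma_tie**2
    combined as an inverse-variance weighted mean.
    """
    w_own = 1.0 / sigma_gps**2
    w_neighbour = 1.0 / (sigma_gps**2 + sigma_tie**2)
    return 1.0 / math.sqrt(w_own + 2.0 * w_neighbour)


# Hypothetical values in US survey feet: 0.030 ft GPS, ~2 mm (0.0066 ft) ties.
sigma_gps = 0.030
print(middle_point_sigma(sigma_gps, 0.0066))  # GPS on 2, 3, 4 plus tight ties
print(sigma_gps / math.sqrt(3))               # limit: three GPS occupations
```

With ties that tight the combined value comes out at roughly 0.0176 ft against 0.0173 ft for three perfect occupations, and it drifts back toward the single-occupation 0.030 ft as the ties get weaker. For a simple chain like 2-3-4 with no loops, this weighted mean gives the same standard error for point 3 as a least-squares adjustment of the same observations.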

Peter Lothian - MA ME, post: 431844, member: 4512 wrote: You mentioned doing this as efficiently as possible. Is it possible that hitting all the control points 2 - 24 with the PPK GPS is overly redundant? Would your results be sufficiently good if you had only observed say 1, 5, 10, 19, & 24? How long did you spend observing each point?

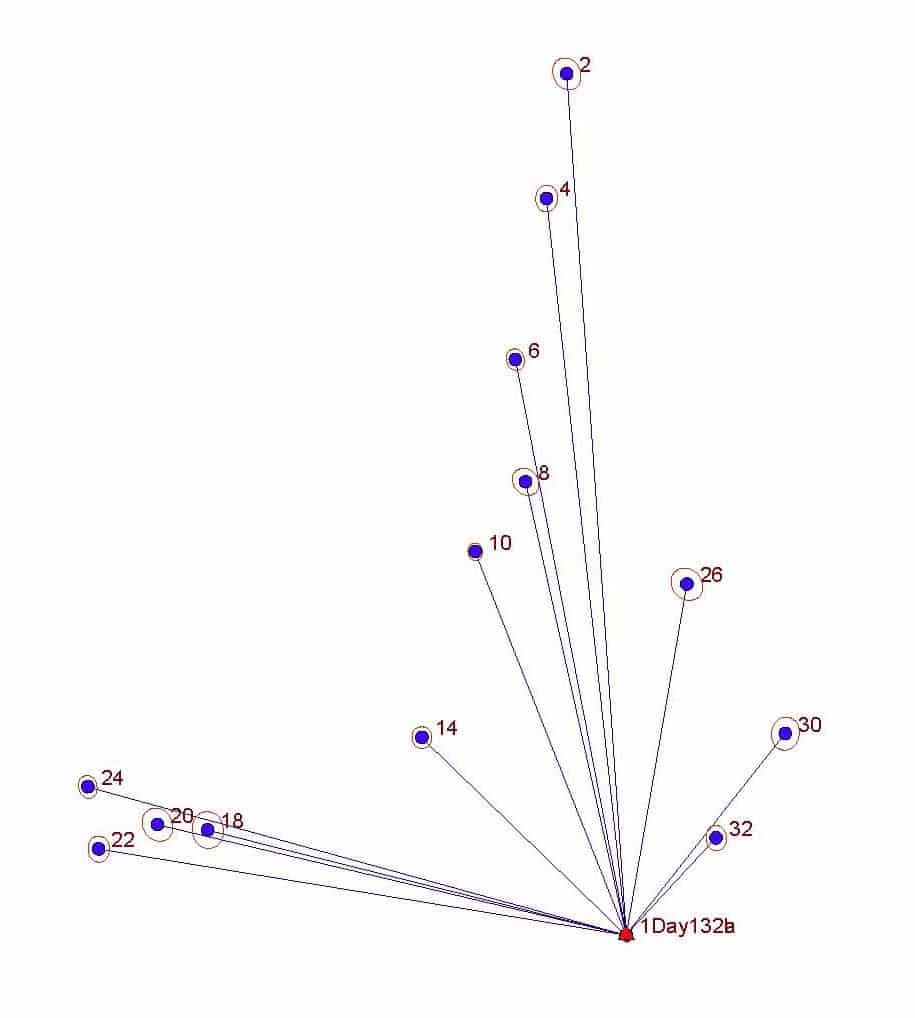

Here's the first answer. This set of standard errors of control points is what the network would yield if only roughly every other one had a GPS vector to it. There is an increase in uncertainty, but it isn't much. These are the sparser set of GPS vectors used in the adjustment of the same conventional traverse through the entire set of control points:

And the resulting uncertainties of the control points to compare to those listed in my post above:

[PRE]
Error Propagation
=================
Station Coordinate Standard Deviations (FeetUS)

Station       N       E    Elev
1         0.010   0.007   0.024
2         0.013   0.015   0.031
3         0.012   0.013   0.031
4         0.012   0.011   0.030
5         0.012   0.010   0.030
6         0.012   0.010   0.030
7         0.012   0.009   0.030
8         0.011   0.009   0.030
9         0.011   0.009   0.030
10        0.011   0.009   0.030
11        0.012   0.009   0.030
12        0.012   0.010   0.030
13        0.012   0.010   0.030
14        0.012   0.010   0.030
15        0.014   0.011   0.030
16        0.014   0.011   0.030
17        0.014   0.010   0.030
18        0.015   0.010   0.030
19        0.014   0.010   0.030
20        0.014   0.010   0.030
21        0.014   0.010   0.030
22        0.014   0.010   0.030
23        0.015   0.010   0.031
24        0.014   0.011   0.031
[/PRE]

I think it would be fair to say that locating every other control point with GPS is a relatively efficient scheme, since the effort is roughly half and the increase in uncertainty is only about 10% or less.
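Once both runs are exported, the comparison can be stated as a percentage per station and component. A minimal Python sketch, assuming the listing keeps the four-column layout of the error-propagation table (station, N, E, Elev); the function names are hypothetical. The every-point results from the earlier post are not reproduced here, so the usage only parses a few rows of the every-other-point table.

```python
from typing import Dict, Tuple

Sigmas = Dict[str, Tuple[float, float, float]]


def parse_station_sigmas(text: str) -> Sigmas:
    """Read 'Station N E Elev' rows from an error-propagation listing."""
    sigmas: Sigmas = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 4:
            continue  # titles, underlines, blank lines
        try:
            n, e, elev = (float(p) for p in parts[1:])
        except ValueError:
            continue  # the column-header row
        sigmas[parts[0]] = (n, e, elev)
    return sigmas


def percent_increase(dense: Sigmas, sparse: Sigmas) -> Dict[str, Tuple[float, ...]]:
    """Per-station, per-component % change from the dense to the sparse run."""
    return {
        stn: tuple(100.0 * (s / d - 1.0) for s, d in zip(sparse[stn], dense[stn]))
        for stn in dense
        if stn in sparse
    }


listing = """Station       N       E    Elev
1         0.010   0.007   0.024
2         0.013   0.015   0.031
18        0.015   0.010   0.030"""
sparse = parse_station_sigmas(listing)
worst = max(sparse, key=lambda stn: sparse[stn][0])  # largest N sigma
print(worst, sparse[worst])
```

Feeding the every-point listing in as `dense` and this one as `sparse` to `percent_increase` would give the station-by-station increase behind the "10% or less" figure.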

[SARCASM]I used to use old receivers like that..... until my dad got a job.....[/SARCASM] (Actually, thanks for the info. Definitely something to consider in our networks here.)

I have just begun mixing GPS with conventional methods. I have never used LSA, only the compass rule. I really like the idea of getting more out of an adjustment program while being able to mix grid and ground distances. Where does one learn to begin using this? Just jump in headfirst? SurvNet or Star*Net? I've been thinking about going through some tutorials.

JMH4825, post: 432180, member: 10876 wrote: I have just begun mixing GPS with conventional methods. I have never used LSA, only the compass rule. I really like the idea of getting more out of an adjustment program while being able to mix grid and ground distances. Where does one learn to begin using this? Just jump in headfirst? SurvNet or Star*Net? I've been thinking about going through some tutorials.

I use Star*Net. The advantage of LSA software that can import GPS vectors in a number of different manufacturers' formats, as well as conventional observations in various standard data collector (DC) formats, is that you aren't tied to any particular manufacturer's format. The Star*Net input format is plain ASCII text that can be edited and that, with some annotations and notes, makes a very good permanent record of the raw data.

What I particularly like about Star*Net is its simplicity and backwards compatibility. Disclaimer: I'm still running Star*Net Pro V.6 with the separate DC conversion program that translates conventional data in SDR format to Star*Net input format.

Here's a sample of part of the conventional input file:

[PRE]
# Job ID: 17-881
.Units FeetUS
.Units DMS
.Order FromAtTo
.Sep -
.3D
.DELTA OFF

# At Spike 11
DV 11-10 241.8512 87-24-28.75 5.285/4.945 'SPIKE.WASHER
M 10-11-434 0-34-44.00 141.2250 86-48-36.00 5.285/4.945 'SPIKE.BC.CL.PVMT
M 10-11-435 1-48-27.50 119.2900 86-47-34.00 5.285/4.945 'SPIKE.MP.CL.PVMT
M 10-11-436 2-50-19.50 99.9550 86-42-20.50 5.285/4.945 'SPIKE.EC.CL.PVMT
M 10-11-437 10-38-56.50 36.2050 87-42-19.50 5.285/4.945 'SPIKE.BC.CL.PVMT
M 10-11-438 94-27-54.00 19.4050 95-20-32.50 5.285/4.945 'SPIKE.MP.CL.PVMT
M 10-11-439 123-42-18.00 65.3550 94-45-29.50 5.285/4.945 'SPIKE.EC.CL.PVMT
M 10-11-440 123-25-13.50 131.7700 94-12-26.00 5.285/4.945 'SPIKE.AP.CL.PVMT
#DV 11-10 241.8500 87-24-29.75 5.285/4.945 'SPIKE.WASHER
M 10-11-12 122-09-47.50 299.9000 92-34-28.75 5.285/4.945 'SPIKE.WASHER

# At Spike 12
DV 12-11 299.8663 87-33-49.75 5.325/4.945 'SPIKE.WASHER
M 11-12-441 347-32-29.00 20.7300 91-16-12.50 5.325/4.945 'SPIKE.AP.CL.RD
M 11-12-442 268-36-11.50 5.3625 98-56-10.50 5.325/4.945 'SPIKE.AP.CL.RD
M 11-12-443 202-52-28.00 16.4500 93-36-50.00 5.325/4.945 'SPIKE.AP.CL.RD
M 11-12-444 236-12-26.50 26.0675 94-56-49.00 5.325/4.945 'SPIKE.AP.CL.RD
#DV 12-11 299.8663 87-33-50.50 5.325/4.945 'SPIKE.WASHER
M 11-12-13 256-15-38.00 97.4750 90-10-59.00 5.325/4.945 'SPIKE.WASHER

# At Spike 13
DV 13-12 97.4850 90-24-27.00 5.565/4.945 'SPIKE.WASHER
M 12-13-445 0-53-26.50 44.5975 95-37-02.00 5.565/4.945 'SPIKE.AP.CL.RD
M 12-13-446 233-22-43.50 6.4350 91-55-36.00 5.565/4.945 'SPIKE.AP.CL.RD
M 12-13-447 198-16-18.00 27.6400 85-02-30.50 5.565/4.945 'SPIKE.MP.CL.RD
M 12-13-448 199-15-23.50 47.6950 84-48-05.00 5.565/4.945 'SPIKE.EC.CL.RD
M 12-13-449 202-44-39.50 85.6450 85-56-37.00 5.565/4.945 'SPIKE.BC.CL.RD
M 12-13-450 202-29-33.50 98.4750 86-14-02.00 5.565/4.945 'SPIKE.MP.CL.RD
M 12-13-451 201-41-21.50 110.6750 86-28-46.00 5.565/4.945 'SPIKE.EC.CL.RD
#DV 13-12 97.4850 90-24-24.25 5.565/4.945 'SPIKE.WASHER
M 12-13-14 204-02-52.00 112.8100 86-29-43.00 5.565/4.945 'SPIKE.WASHER
[/PRE]

The "#" character marks comment lines. I added the "At Spike 11"-style comments just to improve legibility. I also commented out some of the "DV" lines by prefixing them with "#": where the same instrument setup measured to the same prism setup twice, it was better to remove the duplicate measurement than to leave it in as if it were a truly independent remeasurement.
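Because the input is plain text, a chore like that DV clean-up is easy to script. The Python sketch below reads M and DV lines in the layout of the sample (assuming .Order FromAtTo, .Sep - and .3D, with the text after the single quote taken as the description) and comments out a repeated DV from the same instrument setup to the same target. The function names are hypothetical; this is not part of Star*Net or its DC conversion program, and it only handles the two record types used here.

```python
from typing import Dict, List


def dms_to_deg(dms: str) -> float:
    """'DDD-MM-SS.ss' -> decimal degrees (non-negative angles only)."""
    d, m, s = dms.split("-")
    return int(d) + int(m) / 60.0 + float(s) / 3600.0


def parse_record(line: str) -> Dict:
    """Parse an M or DV line written under .Order FromAtTo, .Sep - and .3D.

    Returns {} for blank lines, '#' comments and '.' inline options.
    Text after a single quote is kept as the point description.
    """
    body = line.strip()
    if not body or body.startswith(("#", ".")):
        return {}
    body, _, desc = body.partition("'")
    f = body.split()
    code = f[0].upper()
    if code == "M":  # M From-At-To angle slope-dist zenith HI/HT
        frm, at, to = f[1].split("-")
        hi, ht = (float(x) for x in f[5].split("/"))
        return {"code": code, "from": frm, "at": at, "to": to,
                "angle": dms_to_deg(f[2]), "dist": float(f[3]),
                "zenith": dms_to_deg(f[4]), "hi": hi, "ht": ht, "desc": desc}
    if code == "DV":  # DV At-To slope-dist zenith HI/HT
        at, to = f[1].split("-")
        hi, ht = (float(x) for x in f[4].split("/"))
        return {"code": code, "at": at, "to": to, "dist": float(f[2]),
                "zenith": dms_to_deg(f[3]), "hi": hi, "ht": ht, "desc": desc}
    return {"code": code}  # other record types: not handled in this sketch


def comment_duplicate_dv(lines: List[str]) -> List[str]:
    """Prefix '#' to a repeated DV from the same setup to the same target.

    Assumption: a setup is a run of consecutive records with the same
    instrument station. HI and HT are part of the key, so a changed
    instrument or prism height is treated as a different setup.
    """
    out: List[str] = []
    seen = set()
    current_at = None
    for line in lines:
        rec = parse_record(line)
        if "at" in rec and rec["at"] != current_at:
            current_at, seen = rec["at"], set()  # instrument moved
        if rec.get("code") == "DV":
            key = (rec["at"], rec["to"], rec["hi"], rec["ht"])
            if key in seen:
                out.append("#" + line)
                continue
            seen.add(key)
        out.append(line)
    return out


setup_11 = [
    "DV 11-10 241.8512 87-24-28.75 5.285/4.945 'SPIKE.WASHER",
    "M 10-11-434 0-34-44.00 141.2250 86-48-36.00 5.285/4.945 'SPIKE.BC.CL.PVMT",
    "DV 11-10 241.8500 87-24-29.75 5.285/4.945 'SPIKE.WASHER",
    "M 10-11-12 122-09-47.50 299.9000 92-34-28.75 5.285/4.945 'SPIKE.WASHER",
]
for line in comment_duplicate_dv(setup_11):
    print(line)
```

Keeping the duplicate as a comment rather than deleting it preserves the raw measurement in the file, which fits the idea of the input file as the permanent record.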

As for learning Star*Net, one of its great strengths has been the excellent manual that was supplied with the software.

Rankin_File, post: 432169, member: 101 wrote: Actually, thanks for the info. Definitely something to consider in our networks here.

I'll post an example of how the problem of designing an efficient survey, i.e., least uncertainty for least effort, can be tackled using Star*Net's pre-analysis features. On a large project, it would definitely be a feature worth using. Obviously, fieldwork will present variations from the ideal plan, but having a ballpark idea of what should comfortably meet spec with the most moderate effort is usually a good place to start.
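Star*Net's own pre-analysis isn't reproduced here, but the underlying idea can be sketched: given only the planned observations and their standard errors, the predicted covariance of the adjusted positions is (A^T W A)^-1, so alternative designs can be compared before any fieldwork. The Python/NumPy sketch below uses a deliberately simplified one-dimensional chain (an assumption, not Star*Net's 3D model): GPS positions on a chosen set of points plus a conventional difference between each consecutive pair, with hypothetical standard errors. It compares GPS on every point with GPS on every other point; the function name is hypothetical.

```python
import numpy as np


def preanalysis_1d(n_points, gps_points, sigma_gps, sigma_tie):
    """Predicted standard errors of adjusted positions along a 1D chain.

    Planned observations (pre-analysis needs no measured values):
      * a GPS-derived coordinate on each point in gps_points
      * a conventional difference between each pair of consecutive points
    At least one GPS point is needed, otherwise the datum is undefined.
    """
    rows, weights = [], []
    for i in gps_points:
        row = np.zeros(n_points)
        row[i] = 1.0
        rows.append(row)
        weights.append(1.0 / sigma_gps**2)
    for i in range(n_points - 1):
        row = np.zeros(n_points)
        row[i], row[i + 1] = -1.0, 1.0
        rows.append(row)
        weights.append(1.0 / sigma_tie**2)
    A = np.array(rows)
    W = np.diag(weights)
    cov = np.linalg.inv(A.T @ W @ A)  # a priori covariance, variance factor 1
    return np.sqrt(np.diag(cov))


# Hypothetical inputs: 24 points, 0.030 ft GPS, 0.0066 ft (~2 mm) ties.
every_point = preanalysis_1d(24, range(24), 0.030, 0.0066)
every_other = preanalysis_1d(24, range(0, 24, 2), 0.030, 0.0066)
print(every_point.max(), every_other.max())
```

Uniform tie errors ignore the short, weak legs mentioned earlier in the thread; passing a separate standard error per leg would be the obvious extension.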

JMH4825, post: 432180, member: 10876 wrote: I have just begun mixing GPS with conventional methods. I have never used LSA, only the compass rule. I really like the idea of getting more out of an adjustment program while being able to mix grid and ground distances. Where does one learn to begin using this? Just jump in headfirst? SurvNet or Star*Net? I've been thinking about going through some tutorials.

Like Kent, I'm a big StarNet proponent. But if you have SurvNet (which is a component of Carlson Survey), I'd go with that. Dive in: start with a simple block traverse and build up.

Kent McMillan, post: 432200, member: 3 wrote: I'll post an example of how the problem of designing an efficient survey...

Greetings Kent:

Did you ever do this? I'm struggling with learning how to get the most out of the "pre-analysis" feature in Star*net and would love to see a practical example.

rfc, post: 434989, member: 8882 wrote: Greetings Kent:

Did you ever do this? I'm struggling with learning how to get the most out of the "pre-analysis" feature in Star*net and would love to see a practical example.

Yes, I did. It is now well off the stern in the wake of the ship. Conrad posted several times to that thread, so searching his posts should reel it in.

https://surveyorconnect.com/community/threads/a-way-to-plan-survey-accuracy.330996/

Kent McMillan, post: 434991, member: 3 wrote: Yes, I did. It is now well off the stern in the wake of the ship. Conrad posted several times to that thread, so searching his posts should reel it in.

https://surveyorconnect.com/community/threads/a-way-to-plan-survey-accuracy.330996/

Thank you Sir! I missed that one entirely. Must have been in the woods too long.

I'm using GNSS at Mountain Home State Forest. I have 8 receivers running simultaneously, which takes all of my tripods and two of the other crew's tripods: 3 R4 Model 3 receivers, 3 R8s receivers and 2 Topcon GR3 receivers. It's taking 6-hour sessions to get something reasonably accurate (and fixed). The other crew is running the boundary traverse (he volunteered). I get all 8 going, then we go set more control pairs. The task is to get positions on the controlling monuments (all the ones needed have been found) and to avoid having to traverse on roads with logging trucks and taxpayer visitors in their cars. It's working fairly well.

The interesting thing is that it looks more open than the Mendocino County (coastal) forest work, but it is more difficult to get fixed vectors, maybe because of something about those huge trees. With 8 receivers and multi-hour sessions, I get about two-thirds of the vectors fixed. To go a mile I need at least 3 hours; to go a mile and a half I need 6+ hours.

StarNet makes putting it all together relatively easy. I could set it up in TBC with a lot more effort, but in a hotel room at night it's easier to use StarNet. The statistics are better organized, with the 95% error ellipses near the bottom. I'm doing the baseline processing in TBC, which mostly consists of playing with processing masks, but I'm finding it's best to leave the mask at 15° and process everything, because processing a pile of baselines at the same time seems to work better than trying to do them one at a time.
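StarNet and TBC report those ellipses directly; the sketch below only shows where a 95% ellipse comes from, and makes no claim about either program's internal computation. The 1-sigma ellipse axes are the square roots of the eigenvalues of the 2x2 N/E covariance matrix, and scaling by about 2.4477 (the square root of the 95% chi-square value for two degrees of freedom) gives the 95% ellipse. The function name is hypothetical, and since the standard-deviation listing earlier in the thread has no N/E covariance, the usage assumes it is zero.

```python
import math

K95 = 2.4477  # sqrt of the 95% chi-square value for 2 degrees of freedom


def error_ellipse(sigma_n, sigma_e, cov_ne=0.0, k=K95):
    """Return (semi-major, semi-minor, azimuth of semi-major in degrees).

    Eigen-decomposition of the 2x2 N/E covariance matrix, scaled by k.
    Azimuth is measured clockwise from north, in the range [0, 180).
    """
    var_n, var_e = sigma_n**2, sigma_e**2
    mean = (var_n + var_e) / 2.0
    radius = math.hypot((var_n - var_e) / 2.0, cov_ne)
    semi_major = k * math.sqrt(mean + radius)
    semi_minor = k * math.sqrt(max(mean - radius, 0.0))  # guard rounding
    azimuth = 0.5 * math.degrees(math.atan2(2.0 * cov_ne, var_n - var_e))
    return semi_major, semi_minor, azimuth % 180.0


# Station 18 from the error-propagation table, N/E covariance assumed zero.
print(error_ellipse(0.015, 0.010))
```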

I'm surprised nobody has mentioned it...Another way to improve survey accuracy would be to build a wall, on the Oklahoma side of Texas.... Just sayin!

🙂