Frankly, Kent is dumbfounded that anyone would seriously consider using remote CORS sites when there is an excellent CORS site with low network uncertainty and a long time series available within 10km. It's pretty much a no-brainer what will give the best results for a bunch of control points in urban clutter.

Norman Oklahoma, post: 348379, member: 9981 wrote: Your network is minimally constrained relative to the NSRS - the CORS. It is not minimally constrained internally, of course.

That isn't exactly relevant to the objective, though. The orientation of the vectors from the CORS site under 10km distant is independently determined (absent a gross error in the position of the CORS antenna) from the satellite ephemerides. The repeatability of vectors on two days demonstrates the absence of some strange transient condition. The relationship of the network to the CORS antenna is highly overdetermined.

What the objection boils down to is whether the coordinates of the CORS antenna are so good as to be considered to be error-free for practical purposes. One can easily check the CORS antenna coordinates, so the idea that they might be wrong is difficult to take seriously.
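Checking a CORS antenna's coordinates usually means comparing the published position with an independent solution, for example from a separate processing run, and looking at the difference in local east, north and up. Here is a minimal sketch of that comparison. It assumes both positions are ECEF XYZ in the same frame and at the same epoch. The coordinates, latitude and longitude below are hypothetical placeholders, not TXAU's published values.

```python
import math


def ecef_delta_to_enu(dx, dy, dz, lat_deg, lon_deg):
    """Rotate an ECEF coordinate difference (metres) into local east, north, up
    components at the given geodetic latitude and longitude (degrees)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    sin_lat, cos_lat = math.sin(lat), math.cos(lat)
    sin_lon, cos_lon = math.sin(lon), math.cos(lon)
    east = -sin_lon * dx + cos_lon * dy
    north = -sin_lat * cos_lon * dx - sin_lat * sin_lon * dy + cos_lat * dz
    up = cos_lat * cos_lon * dx + cos_lat * sin_lon * dy + sin_lat * dz
    return east, north, up


def compare_to_published(published_xyz, check_xyz, lat_deg, lon_deg):
    """Return (east, north, up, horizontal) differences, check minus published, in metres."""
    dx, dy, dz = (c - p for c, p in zip(check_xyz, published_xyz))
    east, north, up = ecef_delta_to_enu(dx, dy, dz, lat_deg, lon_deg)
    return east, north, up, math.hypot(east, north)


# Hypothetical numbers for illustration only (not any station's published coordinates).
published = (-743000.000, -5460000.000, 3200000.000)
check = (-743000.004, -5460000.006, 3200000.003)
e, n, u, h = compare_to_published(published, check, 30.3, -97.7)
print(f"dE={e:+.4f} m  dN={n:+.4f} m  dU={u:+.4f} m  horizontal={h:.4f} m")
```

A horizontal difference at the millimetre level would support treating the published coordinates as effectively error-free for a local project. A difference of several centimetres would be the kind of discrepancy worth chasing.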

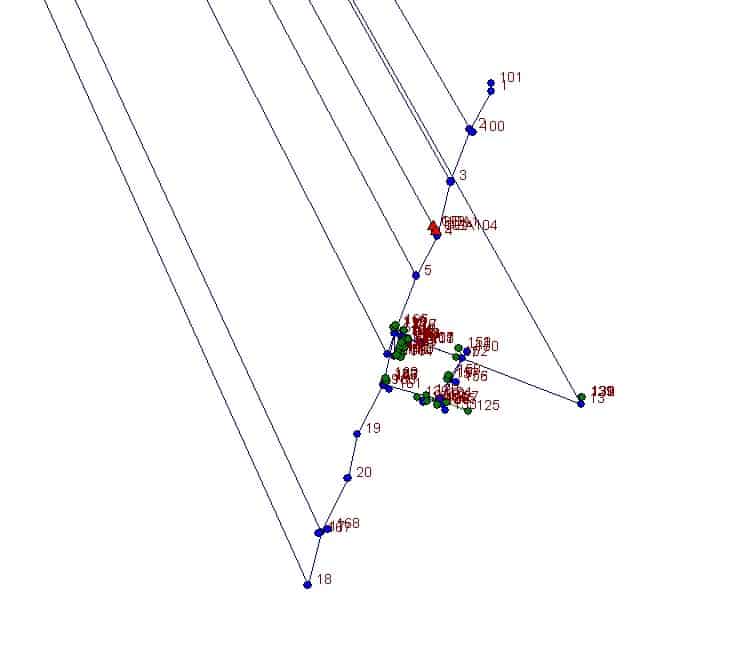

Kent McMillan, post: 348376, member: 3 wrote: I'm sorry, but if you consider a network with as much redundancy as exists in the one I posted as "minimally constrained" you're using words to mean whatever you want them to mean. The network that I posted is a redundant framework of conventional measurements that gave excellent measurements of the figure formed by the series of control points indicated, which were positioned via GPS vectors, three of which were repeated on two different days. The orientation of the conventional network was derived from the adjustment with the GPS vectors weighted by their uncertainties.

What seems to be giving you fellers problems is the idea of using GPS vectors from a single CORS site to determine the positions of things. Apparently, the superstition is that somehow a CORS antenna could just be anywhere and the only way to know for certain is to check it against some other CORS antenna. Considering that this is done daily by NGS in monitoring the National CORS network (of which the CORS site I used is a part), that premise doesn't stand scrutiny well. The other idea was that somehow different CORS antennas would have different satellites in view and that would greatly improve the results. The CORS antenna I used produced clean data from all of the satellites in view, which isn't exactly surprising for a major CORS site.

No need to apologize, Kent. I acknowledge that the 'network' is redundant with both GNSS and terrestrial data, and that each data set enhances the other.

Free adjustment and minimally constrained adjustment are commonly known and well-published concepts. There's even a 'feller' from Texas that teaches and writes about it:

"Internal accuracy estimates made relative to a single fixed point are obtained when so-called free, unconstrained, or minimally constrained adjustments are performed. In the case of a single loop, no redundant observations (or alternate loops) back to the fixed point are available. When a series of GPS baseline loops (or network) are observed, then the various paths back to the single fixed point provide multiple position computations, allowing for a statistical analysis of the internal accuracy of not only the position closure but also the relative accuracies of the individual points in the network (including relative distance and azimuth accuracy estimates between these points). The magnitude of these internal relative accuracy estimates (on a free adjustment) determines the adequacy of the control for subsequent design, construction, and mapping work."

"GPS-performed surveys are usually adjusted and analyzed relative to their internal consistency and external fit with existing control. The internal consistency adjustment (i.e., free or minimally constrained adjustment) is important from a contract compliance standpoint. A contractor's performance should be evaluated relative to this adjustment. The final, or constrained, adjustment fits the GPS survey to the existing network. This is not always easily accomplished since existing networks often have lower relative accuracies than the GPS observations being fit. Evaluation of a survey's adequacy should not be based solely on the results of a constrained adjustment."

"The internal 'free' geometric adjustment provides adjusted positions relative to a single, often arbitrary, fixed point. Most surveys (conventional or GPS) are connected between existing stations on some predefined reference network or datum. These fixed stations may be existing project control points (on NAD 27–SPCS 27) or stations on the NGRS (NAD 83). In OCONUS locales, other local or regional reference systems may be used. A constrained adjustment is the process used to best fit the survey observations to the established reference system."

Kindly elucidate the seven-parameter Helmert transformation and its accuracy and completeness in a network such as the one published above. I am trying to understand the efficacy of this method. It appears to work, but is it properly fixed, transformed, and projected?
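For reference on the question: a seven-parameter Helmert transformation relates two Cartesian (ECEF) frames through three translations, three small rotations and a scale change. Here is a minimal sketch, assuming the small-angle form and the position-vector rotation convention used in the IERS Conventions. Some software uses the coordinate-frame convention instead, which flips the signs of the rotations, so parameters must be applied in the convention they were published in. The parameter values below are hypothetical, not published ITRF/NAD83 values.

```python
import math

ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)


def helmert7(xyz, t, r_arcsec, scale_ppb):
    """Small-angle 7-parameter Helmert transformation of an ECEF point (metres).

    t: (tx, ty, tz) translations in metres
    r_arcsec: (rx, ry, rz) rotations in arcseconds, position-vector sign convention
    scale_ppb: scale change in parts per billion
    """
    x, y, z = xyz
    tx, ty, tz = t
    rx, ry, rz = (a * ARCSEC_TO_RAD for a in r_arcsec)
    d = scale_ppb * 1e-9
    return (
        x + tx + d * x - rz * y + ry * z,
        y + ty + rz * x + d * y - rx * z,
        z + tz - ry * x + rx * y + d * z,
    )


# Hypothetical point and parameters, for illustration only.
point = (-743000.0, -5460000.0, 3200000.0)
print(helmert7(point, (1.0, -1.9, -0.5), (0.03, -0.01, 0.01), 1.5))
```

A transformation of this kind matters when positions are moved between frames, for example from an ITRF-based solution to NAD83. The thread doesn't say whether any such transformation was applied inside the processing for the network discussed.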

The fact that your measurements from the CORS are highly precise and repeatable doesn't change the fact that your network is minimally constrained - with respect to the NSRS. Re-measuring a line provides blunder detection. Weighted averages of multiple measurements of a line improve the precision of the measurement. But that is not the same thing as constraining an adjustment.
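The weighted-average point can be illustrated with an inverse-variance weighted mean. This is a minimal sketch that assumes the repeated measurements are independent. The two line measurements and their sigmas are hypothetical.

```python
import math


def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean of repeated measurements and its standard
    error, assuming the measurements are independent."""
    weights = [1.0 / s**2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total
    return mean, math.sqrt(1.0 / total)


# Hypothetical: the same line measured on two days, each +/- 6 mm (1 sigma).
mean, sigma = weighted_mean([1523.412, 1523.418], [0.006, 0.006])
print(f"{mean:.4f} m +/- {sigma:.4f} m")  # sigma shrinks by a factor of 1/sqrt(2)
```

This tightens the precision of that one line, but no additional point is held. That is the distinction being drawn between improving precision and constraining an adjustment.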

Which is totally okay, IMO. What you are doing here is precisely what I do on a great many of my projects (except that I am usually using RTK vectors). The redundancy of the several vectors tied together by the terrestrial measurements provides all the blunder detection one could ask for and very good relative accuracy from an internally constrained network. I'm much more concerned with the internal precision than network accuracy. In my part of the world the network is literally a moving target anyway.

In one sense your network is constrained. In another it is not.

I think what I find so incredibly exasperating about this discussion is that it should be obvious that the network design I used also gives connections to NAD83 such that the horizontal components of the network uncertainties of the control points are not much worse than those of the CORS antenna.

The fact that the network relies entirely upon the position of the CORS antenna doesn't strike me as much of an objection, since the correctness of that position can be independently checked, as I have mentioned.

So, to object that some manual of step-1-2-3 procedures requires ties to some other (inferior) remote CORS antenna doesn't seem very thoughtful.

Yes, I agree, and you agree with me; that's not what you are saying.

Norman Oklahoma, post: 348406, member: 9981 wrote: The fact that your measurements from the CORS are highly precise and repeatable doesn't change the fact that your network is minimally constrained - with respect to the NSRS.

Yes, but I trust you see the point that worrying about connecting that particular network to some other CORS site of inferior quality is formalism without purpose. The only question of real significance is whether the CORS antenna coordinates that were used as the basis for deriving NAD83 positions in the network were accurate. That is a question that can be answered.

As vector lengths grow well beyond 10km, obviously other considerations are present, but this isn't that case.

No need for exasperation either, my friend. Discussion.

We can (hopefully) agree on a few commonly accepted principles:

1. No measurement is perfect or perfectly accurate. The coordinates of control stations are based on previous measurements, therefore the coordinates of any given CORS station might not be perfect.

2. The GNSS signals received by a CORS station are usually of very good quality, but never perfect. This could affect the on-site 'seed coordinates' of post-processed GPS control stations.

Keep using your methods; partial adjustments might be all that is necessary.

Kent McMillan, post: 348410, member: 3 wrote: ..... connecting that particular network to some other CORS site of inferior quality is formalism without purpose....

We agree on that.

Moe Shetty, post: 348411, member: 138 wrote:

We can (hopefully) agree on a few commonly accepted principles:

1. No measurement is perfect or perfectly accurate. The coordinates of control stations are based on previous measurements, therefore the coordinates of any given CORS station might not be perfect.

That ignores the practical fact that certain CORS sites are as close to being error-free realizations of NAD83 as can be had.

2. The GNSS signals received by a CORS station are usually of very good quality, but never perfect. This could affect the on-site 'seed coordinates' of post-processed GPS control stations.

Except the coordinates of CORS antennas are well known. Their uncertainties do not produce an uncertainty of any significance in the components of post-processed vectors 10km in length.
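The claim can be put into rough numbers with a commonly used rule of thumb. An error δX in the held reference coordinates produces a relative baseline error of roughly δX/r, where r is the receiver-to-satellite range (about 20,000 km). Texts differ on the constant, so `sat_range_m` is a parameter here, and the output should be read only as an order-of-magnitude estimate. Two separate effects are involved. An error in the CORS coordinates still shifts every derived position by essentially the same amount. This rule addresses only the distortion of the vectors themselves.

```python
def baseline_error_from_reference(ref_error_m, baseline_m, sat_range_m=20_000_000.0):
    """Rule-of-thumb error in a baseline's components caused by an error in the
    held reference-station coordinates: db ~= b * dX / r, where r is roughly the
    receiver-to-satellite distance (an approximation, not an exact model)."""
    return baseline_m * ref_error_m / sat_range_m


# Illustrative reference-coordinate errors over a 10 km vector.
for ref_error in (0.01, 0.05, 1.0):
    err = baseline_error_from_reference(ref_error, 10_000.0)
    print(f"reference error {ref_error:5.2f} m -> baseline error ~{err * 1000:.3f} mm over 10 km")
```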

I must say I'm a bit thrown by this discussion. Aside from having derived his relationship with the grid coordinate system (NAD83?) from just TXAU, I'm not seeing much 'wrong' with what Kent's done. And if Texas' CORS stations are anything like those here, then their health is constantly monitored. Unless he used observations from TXAU during or after an earthquake, he's brought coordinates to his project that will be as good as it's going to get. Is the contention that TXAU could have moved before, during, or after the project?

With respect to processing strategies and satellites, some publication recently tested AUSPOS and rated it the best positioning service, if I remember correctly. Well, from the discussions I've had with the head guys there, they're changing, or have changed, their processing strategy (again). So if one were to make an appeal to (their) authority, for example, then one would want to date-stamp that appeal! Did they get it wrong the first time around? They used to select the CORS station to process your data with based on the number of double-difference solutions formed. So if the maximum number of DD solutions was formed with a station 100km away, and you had three closer ones, you'd still get processed from 100km away. Now I'm told they are moving to a nearest-station processing strategy. The reports returned to me recently suggest this may have happened already; I'll have to call them to find out. What does this say about common satellites, proximity, and atmospherics, and about the wisdom of the strategy they chose before?
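The two station-selection strategies described above can be contrasted in a toy sketch. This is not AUSPOS's actual logic, which the thread doesn't document. The station names, satellite counts and both strategy functions are hypothetical. The only fact relied on is that, for one baseline and one observable, n satellites in common at an epoch give n - 1 independent double differences.

```python
def independent_double_differences(common_sats_per_epoch):
    """Count independent double differences for one baseline and one observable:
    with n satellites in common at an epoch, n - 1 are independent."""
    return sum(max(n - 1, 0) for n in common_sats_per_epoch)


def pick_reference(stations, strategy):
    """Choose a reference station under one of two hypothetical strategies.

    stations: list of dicts with 'name', 'distance_km' and 'common_sats'
              (per-epoch counts of satellites shared with the rover)
    strategy: 'max_dd' (most double differences) or 'nearest'
    """
    if strategy == "max_dd":
        best = max(stations, key=lambda s: independent_double_differences(s["common_sats"]))
    elif strategy == "nearest":
        best = min(stations, key=lambda s: s["distance_km"])
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return best["name"]


# Hypothetical stations: the near one shares fewer satellites at some epochs.
stations = [
    {"name": "NEAR", "distance_km": 12.0, "common_sats": [9, 9, 8, 8]},
    {"name": "FAR", "distance_km": 100.0, "common_sats": [10, 10, 10, 10]},
]
print(pick_reference(stations, "max_dd"), pick_reference(stations, "nearest"))
```

The toy shows how a pure double-difference count can favor a distant station even though a shorter baseline shares more of the atmospheric error. That is the trade-off raised above.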

As for his local network, it seems overdetermined to me with respect to position and orientation in NAD83. All he'd have needed would be one E&N, and another E or N, for a minimal fit to NAD83, but as it is he's well overdetermined. The only thing I'd have done differently, and only if it was available, would have been to sight off to a trig station. Throw this in for a precise determination of azimuth, and then his TPS work is essentially translating (not rotating much) to a best fit within the positional uncertainties of his GPS (NAD83) points. I say this is essentially (not exactly) what I understand will happen, since the precision of his GPS vectors will have little effect on his relatively precise TPS work.
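Three coordinates (one E and N, plus one more E or N) fix the two translations and one rotation needed to place a TPS network on the grid, and anything beyond that is redundancy. The sketch below does the overdetermined version: a weighted least-squares rotation-plus-translation fit of local TPS coordinates onto GPS-derived grid coordinates. It assumes the TPS distances have already been reduced to grid, so there is no scale parameter, and the data are synthetic. With n points the fit has 2n - 3 degrees of freedom. This is a simplification of a full simultaneous adjustment of the raw GPS vectors and conventional observations.

```python
import math


def fit_rotation_translation(local_pts, grid_pts, weights=None):
    """Weighted least-squares 2D fit (rotation + translation, no scale) mapping
    local (x, y) coordinates onto grid (E, N) coordinates.
    Returns (theta, te, tn); theta is counterclockwise, in radians."""
    w = weights or [1.0] * len(local_pts)
    total = sum(w)
    cx = sum(wi * p[0] for wi, p in zip(w, local_pts)) / total
    cy = sum(wi * p[1] for wi, p in zip(w, local_pts)) / total
    ce = sum(wi * q[0] for wi, q in zip(w, grid_pts)) / total
    cn = sum(wi * q[1] for wi, q in zip(w, grid_pts)) / total
    s_dot = s_cross = 0.0
    for wi, (x, y), (e, n) in zip(w, local_pts, grid_pts):
        px, py, qe, qn = x - cx, y - cy, e - ce, n - cn
        s_dot += wi * (px * qe + py * qn)
        s_cross += wi * (px * qn - py * qe)
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated local centroid onto the grid centroid.
    return theta, ce - (c * cx - s * cy), cn - (s * cx + c * cy)


def apply_fit(theta, te, tn, pt):
    """Transform one local (x, y) point into grid (E, N)."""
    c, s = math.cos(theta), math.sin(theta)
    return c * pt[0] - s * pt[1] + te, s * pt[0] + c * pt[1] + tn


# Hypothetical TPS coordinates of three control points; grid values are synthetic and error-free.
local = [(0.0, 0.0), (250.0, 0.0), (120.0, 180.0)]
grid = [apply_fit(0.002, 1000.0, 2000.0, p) for p in local]
theta, te, tn = fit_rotation_translation(local, grid)
print(math.degrees(theta) * 3600, te, tn)  # rotation in arcseconds, translations in metres
```

Weighting by the inverse variance of each GPS-derived point lets the better-determined points pull harder on the fit, which roughly mirrors weighting the GPS vectors by their uncertainties.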

I'm sorry if I've misunderstood the objections to his work. It all seems above board to me.

Conrad, post: 348579, member: 6642 wrote: I must say I'm a bit thrown by this discussion. Aside from having derived his relationship with the grid coordinate system (NAD83?) from just TXAU, I'm not seeing much 'wrong' with what Kent's done. And if Texas' CORS stations are anything like those here, then their health is constantly monitored.

Yes, TXAU is a major CORS site that is both monitored daily and is a part of the IGS network. Just wanting to tie to other CORS sites purely for the sake of form is what I call "formalism". It really wouldn't make any sense in the example posted.

As for his local network, it seems overdetermined to me with respect to position and orientation in NAD83. All he'd have needed would be one E&N, and another E or N, for a minimal fit to NAD83, but as it is he's well overdetermined. The only thing I'd have done differently, and only if it was available, would have been to sight off to a trig station.

As it turned out, I actually extended the network further South to make the tie to the Model T Ford Axle found marking a property corner that appeared in some historic survey records. It improved the uncertainty in orientation of the network and dropped the azimuth uncertainties of the lines between control points to around +/- 3 seconds (at 95% confidence).
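An azimuth uncertainty becomes easier to judge once it is converted to a lateral offset at the end of a line. Here is a minimal sketch. The 300 m line length is hypothetical, since the thread doesn't give line lengths. The 1.96 factor applies to a single normally distributed quantity (one-dimensional 95%).

```python
import math


def lateral_offset(azimuth_error_arcsec, distance_m):
    """Displacement perpendicular to a line, at distance_m, caused by an azimuth error."""
    return distance_m * math.radians(azimuth_error_arcsec / 3600.0)


def one_sigma_from_95(value_95, k=1.96):
    """Scale a 95% confidence value back to 1 sigma for a single normally distributed quantity."""
    return value_95 / k


# Hypothetical 300 m line with a +/- 3 arcsecond (95%) azimuth uncertainty.
print(f"{lateral_offset(3.0, 300.0) * 1000:.1f} mm at 95%")
print(f"{one_sigma_from_95(3.0):.2f} arcsec at 1 sigma")
```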

Kent McMillan, post: 348580, member: 3 wrote: As it turned out, I actually extended the network further South to make the tie to the Model T Ford Axle found marking a property corner that appeared in some historic survey records. It improved the uncertainty in orientation of the network and dropped the azimuth uncertainties of the lines between control points to around +/- 3 seconds (at 95% confidence).

OK, well, I give you a pass. I see you're going for extra credit in your latest topic.

Mark Mayer, post: 347882, member: 424 wrote: Could you elaborate on that? I've seen some "minimum standards" and a few "suggested procedures" but never anything that rises to "rules for", and I'd very much like to.

How about guidelines? NOS NGS-58 and 59 have served me well (though I generally use longer sessions than recommended).

One of the things those guidelines specify is fixed-height tripods -- no messing with taped HIs or tribrach adjustments. I highly recommend the kind with a rotatable center pole so that a bubble check can quickly be done at every setup.

With the exception of RTK, I can count on one hand the times I've mounted an antenna on anything but a 2-meter tripod in the last 15 years.

Kent McMillan, post: 348054, member: 3 wrote: My suspicion would be that you are adjusting two highly correlated vectors to a common point as if they are actually independent vectors. Of course they aren't if they both use exactly the same observations at the rover/unknown point, but if you treat them as such it will make the results appear to be better than they are.

If one is going to do that, the very best way of testing the reality of the results is simply by connecting them by high-quality conventional measurements and adjusting a traverse run through the GPS points, using the supposed uncertainties in the GPS vectors to weight the GPS points as conditions in the adjustment.
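The suggestion to adjust conventional measurements through the GPS points, with the GPS positions weighted by their stated uncertainties, can be reduced to a one-dimensional toy: two GPS-derived positions and one EDM distance between them. The values and sigmas are hypothetical. The model ignores angles, correlations and everything 2D, so it only shows the mechanism.

```python
import math


def combine_gps_and_distance(g1, g2, sigma_gps, d, sigma_d):
    """1D toy combined adjustment: two GPS-derived positions (weighted as observations)
    plus one conventional distance between them. Returns adjusted x1, x2 and the
    standard deviation of the adjusted distance x2 - x1."""
    wg, wd = 1.0 / sigma_gps**2, 1.0 / sigma_d**2
    # Observations: x1 = g1, x2 = g2, x2 - x1 = d. Normal matrix is [[a, b], [b, a]].
    a, b = wg + wd, -wd
    u1 = wg * g1 - wd * d
    u2 = wg * g2 + wd * d
    det = a * a - b * b
    x1 = (a * u1 - b * u2) / det
    x2 = (a * u2 - b * u1) / det
    # Inverse normal matrix: q11 = q22 = a/det, q12 = -b/det; var(x2 - x1) = q11 + q22 - 2*q12.
    var_dist = 2.0 * a / det - 2.0 * (-b / det)
    return x1, x2, math.sqrt(var_dist)


# Hypothetical: GPS positions +/- 15 mm, EDM distance +/- 3 mm (1 sigma).
x1, x2, s_dist = combine_gps_and_distance(0.000, 250.020, 0.015, 250.004, 0.003)
print(f"adjusted distance {x2 - x1:.4f} m +/- {s_dist * 1000:.1f} mm "
      f"(GPS alone: +/- {0.015 * math.sqrt(2) * 1000:.1f} mm)")
```

The precise distance dominates the relative position of the two points, while the GPS observations still carry the connection to the datum. If the GPS sigmas were optimistic, the residuals on the GPS observations would come out large relative to those sigmas, which is the test described above.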

My money will be on the GPS points turning out to be not as excellent as believed from just the statistics of the GPS-only adjustment.

What would you consider to be a highly correlated vector?

Kent McMillan, post: 348044, member: 3 wrote: Okay, there clearly is a disconnect in this discussion if so many posters are unaware of the possibility of using conventional measurements between control points positioned by GPS as a condition to improve the accuracy of the GPS-derived positions. ...The conventional measurements provided a very good check on the GPS vectors as well as a condition by which they were adjusted.

I think we are all pretty clear on that. Personally, I am surprised that you know the former but see no value in multiple baselines.

dmyhill, post: 348860, member: 1137 wrote: What would you consider to be a highly correlated vector?

Two vectors using the same observations would be correlated since they have the same rover observations in common, and those observations would be subject to identical effects at the rover, such as the multipath environment. In other words, one would expect that an error present in one vector from some cause originating in the rover observations would also be present in the other vector.
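The effect of that correlation can be quantified with the variance of a mean. For two measurements with equal standard deviation σ and correlation ρ, the variance of their mean is (σ² + σ² + 2ρσ²)/4 = σ²(1 + ρ)/2. Treating correlated vectors as independent (ρ = 0) therefore understates the uncertainty. The value of ρ below is hypothetical; the thread doesn't estimate it.

```python
import math


def sigma_of_mean_of_two(sigma, rho):
    """Standard error of the mean of two measurements with equal sigma and
    correlation coefficient rho: sigma * sqrt((1 + rho) / 2)."""
    return sigma * math.sqrt((1.0 + rho) / 2.0)


# Hypothetical: two vectors sharing one rover occupation, each +/- 10 mm.
claimed = sigma_of_mean_of_two(0.010, 0.0)   # what an independence assumption reports
actual = sigma_of_mean_of_two(0.010, 0.8)    # if shared rover errors gave rho = 0.8
print(f"claimed {claimed * 1000:.1f} mm, with correlation {actual * 1000:.1f} mm")
```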

dmyhill, post: 348861, member: 1137 wrote: I think we are all pretty clear on that. Personally, I am surprised that you know the former but see no value in multiple baselines.

That was pretty much the point of this demonstration: the problem is not one of polishing up every GPS-derived position in a network if they are connected by high-quality conventional measurements. The improvement comes from adjusting the GPS vectors and conventional measurements simultaneously.

Kent McMillan, post: 348863, member: 3 wrote: Two vectors using the same observations would be correlated since they have the same rover observations in common, and those observations would be subject to identical effects at the rover, such as the multipath environment. In other words, one would expect that an error present in one vector from some cause originating in the rover observations would also be present in the other vector.

I see. I would expect the multipath environment to be different, given the typical spacing of control points. The geometry of the satellites would be the same, but there are so many available that I am not sure that is much of a factor. I suppose you could turn off different ones for each solution...

Part of what you are talking about is whether you dumped it all into processing software, pushed the button, and let it magically give you results. I am not advocating that, and if that is happening, more is not always going to be better.

Again, the network RTK is so good that I rarely bother, but if I need static, that indicates (to me) that multiple baselines are warranted. It may not be necessary, but I cannot imagine a reason NOT to.

Those differences aside, your example is very good at demonstrating why conventional traverse techniques + GNSS + post-analysis should be the gold standard for using GPS to "air drop" control into an area. All the guys who place a couple of points at each end of a route, traverse, and wonder why it "misses" would do well to check out your techniques.