This is an academic exercise, so for the purposes of the question, eliminate any commercial issues (like the time and cost it takes to run redundant traverses to improve a control network).

Say you run three pretty much identical traverses, weed out outliers, and bring them into Star*net. Say two are really tight; the third, not so much. Mathematically, does it make sense to leave the third out entirely if it raises the Error Factor at all? Is the Chi Square Test the "be-all and end-all" of how good the network is? Is it really as simple as including whatever brings the Error Factor down and dumping any measurements that raise it?

The math behind least squares assumes a bunch of things, which are (more than?) satisfied if the errors are all Gaussian (normal), unbiased, and have known standard deviations.

So if you correctly weed out all the blunders and systematic errors, and can reasonably estimate the standard error for each measurement, then all those remaining measurements add information to the fitting process. The higher the standard error, the less information they add, but throwing out too many reduces your accuracy.
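To put a number on "the less information they add", here is a minimal sketch for the simplest possible case: several independent, unbiased determinations of the same quantity, combined by inverse-variance weighting. The function name `combined_sigma` and the standard errors (2 mm, 2 mm, 5 mm) are made up for illustration and are not taken from anyone's network.

```python
from math import sqrt

def combined_sigma(sigmas):
    """Standard error of the inverse-variance weighted mean of
    independent, unbiased determinations of the same quantity."""
    # Each determination contributes "information" 1/sigma^2;
    # the information of the combination is the sum.
    information = sum(1.0 / s ** 2 for s in sigmas)
    return 1.0 / sqrt(information)

# Hypothetical standard errors in mm: two tight traverses, one looser one.
two_tight = combined_sigma([2.0, 2.0])
all_three = combined_sigma([2.0, 2.0, 5.0])

print(f"two tight traverses only: {two_tight:.2f} mm")
print(f"with the looser third:    {all_three:.2f} mm")
```

With these assumed values the result goes from about 1.41 mm to about 1.36 mm. The looser traverse helps less than a third tight one would, but as long as its standard error is realistic it still tightens the result rather than loosening it.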

If you keep throwing out measurements just because they don't fit as well as others, and lower your std errors accordingly, you can prune it down to get a false sense of security, and a less-accurate result despite passing chi-squared with a report of 1.000 or less.

So the trick is to always correctly identify a blunder and to correctly assign standard errors. Since the true error may vary with how cold your fingers are and how tired your eyes are during the measurement, or in how much of a hurry you are to get to supper, the same standard error may not apply to all of those traverses.

Weed out? Not include? Dump? Unless it's a blunder, put everything "into the soup", and assign realistic standard errors. If you used identical procedures on your 3 sample traverses, they'd all go in with the same standard errors. Weeding out "outliers" that merely might be bad defeats the purpose. Weed out, or apply larger standard errors to, only those measurements that you are certain are not reliable, or do it as a test in a problem network. You should not be adjusting standard errors for acceptable measurements to make your results look better. Assign realistic standard errors and leave 'em alone. (Says the guy who hasn't used Star*net in quite a while.) Good luck.

Ok. That helps (and is not unlike Bill93's advice). I haven't spent much time including specific standard errors for individual measurements, but Star*net certainly makes it easy enough to do. Rather than dump an entire traverse, I'll try increasing the standard errors for individual measurements when I smell a fish, and see what happens.

Thanks

The goal is not in and of itself to obtain a scalar of 1.00. Strive to use the tool properly. Properly identify and weight a priori error assumptions and then compare those assumptions to the a posteriori measurement data. The results of this process allow for the location of blunders and the application of systematic error corrections to the measurements. One expects different errors based upon methodology and equipment. Given the same method and equipment, one expects the errors to be the same. Sometimes there are measurements that are outliers based upon our assumptions. Other times the outliers are based upon some function of the actual data collection. Care needs to be taken that problematic measurements do not exert undue influence on the adjustment.

At one time I worked with two different crews. One crew's work regularly passed the Chi test, with no manipulation of measured data or changes to the a priori error assumptions. The second crew's work would never pass without extensive intervention. I would never consider allowing inferior measurement data to distort the adjustment.

DANEMINCE@YAHOO.COM, post: 350678, member: 296 wrote: I would never consider allowing inferior measurement data to distort the adjustment.

😀

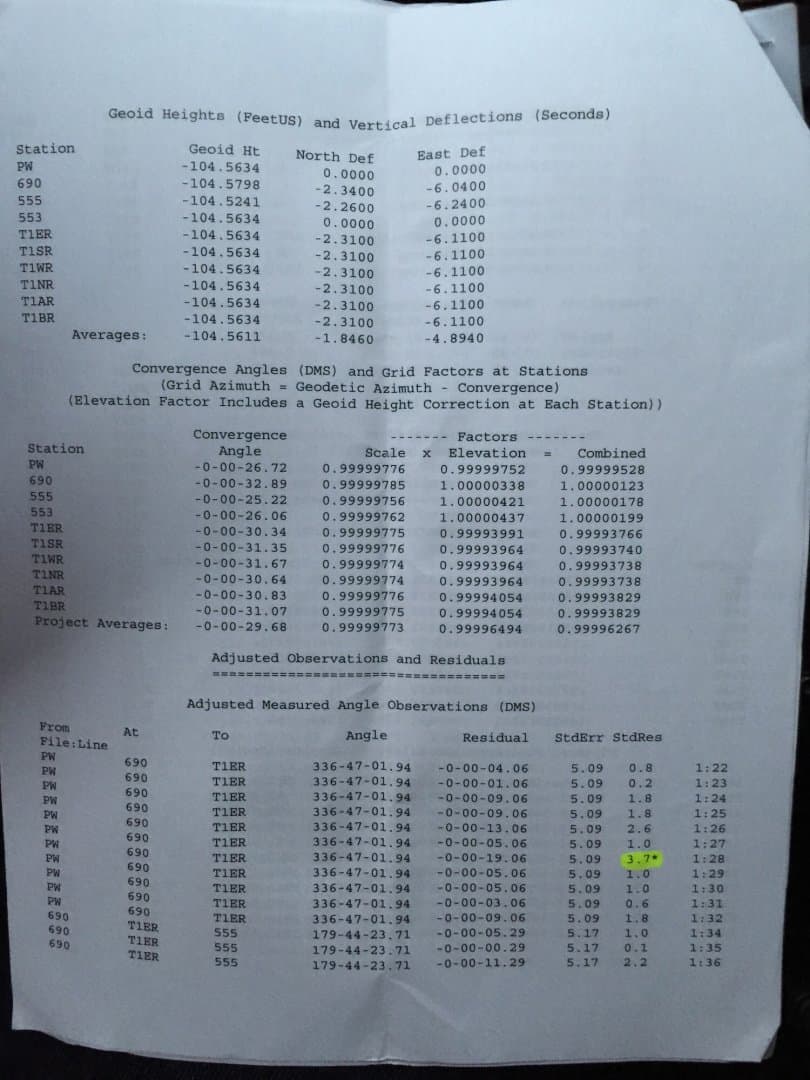

Your post made my day. As crew chief, rod man, I man, and CAD guy, I'm dealing with "inferior measurement data" all the time, lol. But your thoughts make a lot of sense. I've used Scott Z's technique of eliminating individual suspect observations (rather than dumping the entire traverse), and have moved all my standard errors back to what I know them (or am pretty sure) to be. With 113 observations between 10 stations, in 9 roughly triangular, interconnected traverses, I'm about halfway done with the network so far. My combined error factor is 1.1, near the upper limit of Chi Square for these observations (1.147), which is fine by me for now. I know what I have to work on (uhhh, like recording measure ups properly). As they say, Rome wasn't built in a day.

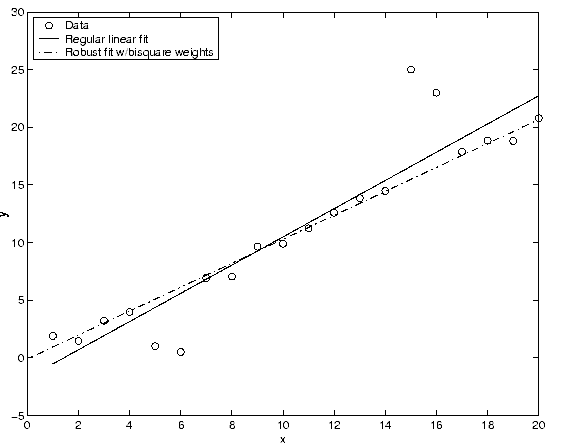
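For anyone wondering where limits like that come from, here is a rough sketch of how an error factor and its chi-square bounds can be computed. It assumes the usual definition (square root of the sum of squared standardized residuals divided by the degrees of freedom) and a two-sided test at the 5% level, which is my understanding of what Star*net reports but is not taken from its documentation. The function names are made up, the chi-square quantile uses the Wilson-Hilferty approximation so that only the standard library is needed, and `dof=100` is a hypothetical value rather than the redundancy of the network above.

```python
from math import sqrt
from statistics import NormalDist

def chi2_quantile(p, dof):
    """Approximate chi-square quantile (Wilson-Hilferty approximation)."""
    z = NormalDist().inv_cdf(p)
    a = 2.0 / (9.0 * dof)
    return dof * (1.0 - a + z * sqrt(a)) ** 3

def error_factor(residuals, sigmas, dof):
    """sqrt( sum((v/s)^2) / dof ): near 1 when the a priori standard
    errors match the scatter actually seen in the residuals."""
    return sqrt(sum((v / s) ** 2 for v, s in zip(residuals, sigmas)) / dof)

def error_factor_bounds(dof, alpha=0.05):
    """Two-sided chi-square limits, expressed on the error-factor scale."""
    lower = sqrt(chi2_quantile(alpha / 2.0, dof) / dof)
    upper = sqrt(chi2_quantile(1.0 - alpha / 2.0, dof) / dof)
    return lower, upper

# Hypothetical redundancy, for illustration only.
lower, upper = error_factor_bounds(dof=100)
print(f"passes if {lower:.3f} <= error factor <= {upper:.3f}")
```

The window narrows as redundancy grows, so the same error factor can pass in a small network and fail in a large one. Falling below the lower limit is also a failure of the test: it suggests the assigned standard errors are too pessimistic, not that the work is extra good.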

A good supplement to least squares is robust least squares, where the sum of the absolute residuals is minimised in the network rather than the sum of the squared residuals. It's like using the median of the observations for the network solution rather than the average, and it is much less affected by blunders or outliers than least squares, which gets pulled towards them. I sometimes used the median (which is a robust estimator) rather than the average in my total station testing.
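The median-versus-average comparison can be checked on a toy case: repeated measurements of a single distance, one of which is a blunder. For a single unknown, the mean is the value that minimises the sum of squared residuals and the median is a value that minimises the sum of absolute residuals. The numbers and helper names below are invented for illustration; a real network has many unknowns and needs a proper solver.

```python
from statistics import mean, median

# Hypothetical repeated measurements of one distance (m); the last is a blunder.
obs = [100.002, 100.001, 99.999, 100.000, 99.998, 100.050]

def sum_sq(x, data):
    """Sum of squared residuals about a candidate value x."""
    return sum((d - x) ** 2 for d in data)

def sum_abs(x, data):
    """Sum of absolute residuals about a candidate value x."""
    return sum(abs(d - x) for d in data)

l2_estimate = mean(obs)    # least squares answer for a single unknown
l1_estimate = median(obs)  # least absolute residuals answer

print(f"mean   = {l2_estimate:.4f}")
print(f"median = {l1_estimate:.4f}")
```

With these values the mean is dragged to roughly 100.0083 by the single bad reading, while the median stays at 100.0005, close to the five good readings.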

I can't say I would begin to know how to apply that analysis within the context of using Star*net for my least squares adjustment, but I think I get what robust least squares is doing. I found an example from Mathworks:

Because it ignores the outliers, it becomes an alternative to weeding them out. So far, that hasn't been a huge challenge, because for the most part they're obvious, but I'm going to read more about it in any case.

I believe we were taught a hybrid method called the Danish weighting method, where the v/s (residual over standard error) of each observation was examined and suspect obs with a value over about 2.5 were successively down-weighted each adjustment, and the solution checked before going again. It was a way of bringing the robust solution's protection against outliers into the least squares solution.
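A minimal sketch of that kind of iterative down-weighting, again for the toy case of repeated measurements of one quantity. The exponential weight factor used here is one form that appears in descriptions of the Danish method, but the exact function, the use of the a priori standard error in v/s, and the name `danish_weighted_mean` are assumptions made for illustration, not what any particular package does.

```python
from math import exp

def danish_weighted_mean(obs, sigmas, cutoff=2.5, iterations=10):
    """Weighted mean with iterative down-weighting of observations whose
    |v|/s exceeds the cutoff. Returns (estimate, final weights)."""
    base = [1.0 / s ** 2 for s in sigmas]  # a priori weights
    weights = list(base)
    estimate = None
    for _ in range(iterations):
        # Ordinary weighted least squares solution for a single unknown.
        estimate = sum(w * x for w, x in zip(weights, obs)) / sum(weights)
        # Re-derive every weight from its a priori value and current residual.
        weights = []
        for x, s, w0 in zip(obs, sigmas, base):
            t = abs(x - estimate) / s  # the "v/s" from the post
            factor = exp(-(t / cutoff) ** 2) if t > cutoff else 1.0
            weights.append(w0 * factor)
    return estimate, weights

# Hypothetical data (m): five consistent readings and one suspect reading.
obs = [100.002, 100.001, 99.999, 100.000, 99.998, 100.020]
sigmas = [0.003] * len(obs)

estimate, weights = danish_weighted_mean(obs, sigmas)
print(f"estimate = {estimate:.4f}")
print("relative weight of last obs:", weights[-1] / weights[0])
```

The suspect reading is never deleted. It simply ends up with a weight so small that it barely moves the answer, while the other five keep their a priori weights.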

Having said that, if most of your obs are of sufficient quality and you have good redundancy, then the difference between solutions using different weightings, and between 'good' and 'average' vf test results, can amount to only a couple of mm in the final adjusted measurements. You can lose sight of how little practical difference you are making. When I'm near the end and I'm playing with weightings, I like to re-input my last coords as the initial coords in the next adjustment. If I only see fractions of a mm change in the next run, then I should leave it alone.

I found this paper that discusses the Danish Method. It seems their work is trying to identify times when the error is NOT Gaussian, specifically when GPS is affected by multipath, and to use that decision to reduce the impact of the errors.

https://www.terrapub.co.jp/journals/EPS/pdf/5210/52100777.pdf

So I can see how that may be beneficial if there is some characteristic of the data that can be used as a reasonably reliable indicator of when conditions are different, particularly in a time series like the GPS data.

It isn't immediately clear to me how much that should apply to outliers in angle and distance measurements. However, these folks seem to be doing something more like that, so I need to do some more reading.

http://gse.vsb.cz/2011/LVII-2011-3-14-29.pdf