Hi

I am trying out Star*Net for the first time and I wondered if anyone could comment on my project.

Please don't mock: I have an old 3" total station (out of calibration)! But I would like suggestions on how to improve accuracy.

The fixed control points come from a professional firm that also ran least squares adjustments.

They used a modern top of the range 1 second robotic instrument.

But I am making the assumption that they are exact.

I have attached a text file that should drop into Star*Net. I have not added any GNSS vectors yet, but the coordinate system is OSGB. The purpose was to set up control within a car park so I can start merging several topos I have. The site is quite difficult as it is surrounded by a palisade fence, so getting line of sight was not possible from all stations. (The attached Google Earth image shows the position well.)

Thank you

William

I'm willing to have a go, but I need to know the average scale factor that pertains to OSGB for this site. I'm using Star*Net-Pro 6.0, which is an older version without the international projections in the library. So the workaround would be to adjust it as a local coordinate system using the scale factor, which should be a perfectly fine approach for a car-park-sized project extent.

Thank you Kent

You might be horrified by the inaccuracy of my surveying!

I still have not quite got my head completely around scale factors.

Starnet tells me # Project Average Combined Factor of 0.9996955274

I have attached a file showing the scale factors for the professional survey.

William

wilba, post: 376733, member: 9024 wrote: I still have not quite got my head completely around scale factors. Starnet tells me # Project Average Combined Factor of 0.9996955274

Hmm. The other surveyor's listing basically gives a CSF = 0.999699 (to round things off to the nearest 1ppm, which should be perfectly good enough for a project under 1km in size). I wonder how Star*Net is generating a different CSF. Granted, the difference isn't much, but it is still a discrepancy that I'd want to look into in case there is more to it.
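To put the size of that discrepancy in perspective, here is a minimal Python sketch that converts the difference between the two quoted combined scale factors into parts per million and into millimetres over a few distances. The two factors are the ones quoted above; the function names and the sample distances are just illustrative assumptions.

```python
# Compare the two combined scale factors quoted in this thread.
CSF_STARNET = 0.9996955274   # Star*Net "Project Average Combined Factor"
CSF_LISTING = 0.999699       # rounded value from the other surveyor's listing


def ppm_difference(a: float, b: float) -> float:
    """Difference between two scale factors, in parts per million."""
    return (a - b) * 1e6


def length_effect_mm(ppm: float, distance_m: float) -> float:
    """Change in a distance of distance_m metres caused by a scale change of ppm."""
    return ppm * 1e-6 * distance_m * 1000.0


diff_ppm = ppm_difference(CSF_LISTING, CSF_STARNET)
print(f"difference: {diff_ppm:.2f} ppm")

# Sample distances (assumed, for illustration only).
for d in (100.0, 500.0, 1000.0):
    print(f"{d:6.0f} m -> {length_effect_mm(diff_ppm, d):.2f} mm")
```

The difference works out to roughly 3.5 ppm, so it is only a few millimetres even over a full kilometre and well under a millimetre across a car park. That does not explain where the discrepancy comes from, only how much it matters.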

I suppose that I could look this up, but is OSGB a projection onto an ellipsoid as one would assume and does the average height difference between the leveling datum and the ellipsoid amount to something more than just a trivial distance?

As far as I understand it...

We have a national grid, OSGB36, with Newlyn Datum.

To convert GNSS observations to it we use OSTN02 and OSGM02 (geoid).

The OS have an online conversion:

https://www.ordnancesurvey.co.uk/gps/transformation

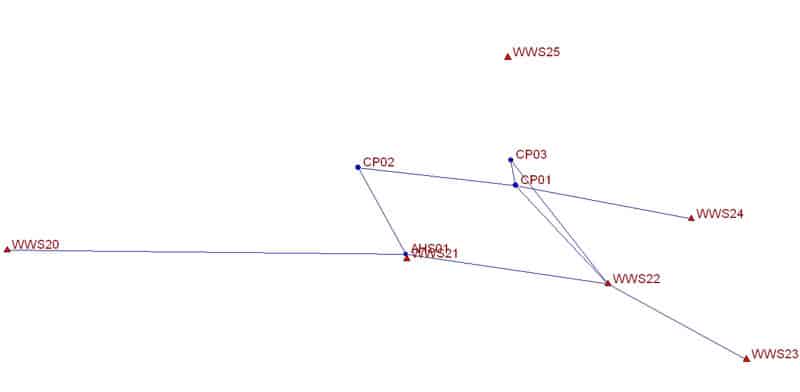

Okay, so is this pretty much what your network looks like?

If so, it would be a good idea to move the E,N coordinate order inline command to the top of the input file:

.ORDER EN

This would mainly be for the benefit of other users who standardly use a N,E,Z format for coordinates.

- Ditto on Kent's remarks. In order to do this up really right we would need to know which grid zone you are working in. But we can proceed based on a local, unscaled adjustment.

- Once I figured out that the coordinates were in ENZ order instead of the NEZ format we commonly use in the US, things went together quickly. Put that ".order EN" command in above the coordinates.

- If you use a ".dat" extension on your data file and view it in StarNet, your commented lines will be green and inline commands red. That makes editing easier. You lose that when you use .txt as the extension.

- After giving the coordinates a small standard error (0.01 0.01 0.01) your data adjusts (local) with a total error factor of about 1.5. That would likely be close to 1.0 if I were properly accounting for the grid. If I hold the control coordinates fixed (! ! ! ) the error factor jumps to over 2, and the distance ASH01-WWS20 shows a residual of 0.05m. This suggests to me that the coordinate of WWS20 is less than perfect.

- In short it looks like your data is free of blunder.
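For readers new to the statistic, the error factor mentioned above can be sketched in a few lines of Python. This is a minimal sketch, assuming the usual definition (the square root of the sum of squared standardized residuals divided by the number of redundancies); the residuals, standard errors and redundancy count below are hypothetical and are not taken from the attached data file.

```python
import math


def error_factor(residuals, std_errors, redundancy):
    """sqrt( sum((v / sigma)^2) / redundancy ), assumed definition of the error factor."""
    if redundancy <= 0:
        raise ValueError("redundancy must be positive")
    if len(residuals) != len(std_errors):
        raise ValueError("residuals and std_errors must have the same length")
    sum_sq = sum((v / s) ** 2 for v, s in zip(residuals, std_errors))
    return math.sqrt(sum_sq / redundancy)


# Hypothetical distance residuals in metres (NOT from the thread's survey).
residuals = [0.002, -0.003, 0.001, 0.004]
redundancy = 3  # hypothetical number of redundant observations

# The same residuals judged against two different a priori standard errors.
ef_3mm = error_factor(residuals, [0.003] * 4, redundancy)
ef_2mm = error_factor(residuals, [0.002] * 4, redundancy)
print(f"sigma = 3 mm: error factor {ef_3mm:.2f}")
print(f"sigma = 2 mm: error factor {ef_2mm:.2f}")
```

A value near 1.0 means the residuals are about as large as the assumed standard errors predict. In a real adjustment the residuals themselves change when the weights change, so holding them constant here is a simplification to show how the statistic responds to the assumed standard errors.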

My first thought is that assuming that the other surveyor's coordinates are absolutely free of any error is probably not a warranted assumption. So it would be more realistic to assign some standard errors to all of them, or so I'd think. That, however, would be most easily done if you could get the coordinate uncertainties from the surveyor. If his or her control network was adjusted by least squares, they should have formal estimates of the coordinate uncertainties, both in relation to each other and in relation to OSGB datum. The relative uncertainties are what I'd think would be most useful for your work, mainly just to get a reasonable estimate of some values to assign to the control coordinates you are basing your work upon. That is, are their relative standard errors +/-1mm, +/-5mm, or +/-1cm?

I worked with StarNet for several years before I gained the confidence to assign small standard errors to all the control coordinates. After all, when you do that StarNet issues a warning message with exclamation points. That's enough to put less stout hearts off. But it makes perfect sense because any control coordinate is going to be imperfect.

I started doing it in conjunction with having "Coordinate changes from entered provisionals" turned on in the report. Any bogus coordinates will show up as having changes. I would then free those up and rerun with the others fixed. But more and more I leave the small standard errors in if project management issues can bear it.

Using the "OrdinalSurvey" zone in Starnet yields a passing error factor and lats/longs (and kml file in v8.1) that are in the general vicinity of the OP's screen capture, but roughly 75 meters south.

Mark Mayer, post: 376750, member: 424 wrote: Using the "OrdinalSurvey" zone in Starnet yields a passing error factor and lats/longs (and kml file in v8.1) that are in the general vicinity of the OP's screen capture, but roughly 75 meters south.

That is a bit concerning. I wonder if the control coordinates are actually OSGB or have been scaled.

Not sure what Ordinal Survey zone is but the current (and final apparently) transformation from lat/long to OSGB36 coords uses a shift grid - OSTN02.

If this is not used then a 75m error would be typical.

Kent McMillan, post: 376743, member: 3 wrote: Okay, so is this pretty much what your network looks like?

If so, it would be a good idea to move the E,N coordinate order inline command to the top of the input file:

.ORDER EN

This would mainly be for the benefit of other users who standardly use a N,E,Z format for coordinates.

Yes network is like that.

.ORDER EN moved to top

I found the inline option after setting the project options.

wilba, post: 376768, member: 9024 wrote: Yes network is like that.

.ORDER EN moved to top

I found the inline option after setting the project options.

I suspect that ENZ coordinate order is probably standard in the UK, but the inline setting makes the input file a bit easier for the US Star*Netizens to run who may unconsciously expect NEZ (as I did until I noticed the inline command following the control coordinate list).

wilba, post: 376719, member: 9024 wrote: Please don't mock I have an old 3" total station (out of calibration) ! But i would like suggestions on how to improve accuracy.

I don't see anything necessarily wrong with using an older total station if it is functioning well, but one thing that is always important for best results in Star*Net is to use realistic estimates of the standard errors of angles and distances measured. The manufacturer's spec for the total station would be a reasonable place to begin. What make and model is the instrument?

The other, equally important element is centering method for instrument and prisms/targets. Is the instrument centered using:

- optical plummet in tribrach,

- optical plummet in instrument alidade (rotates with instrument),

- laser plummet in tribrach, or

- laser plummet in instrument alidade?

If the plummet doesn't rotate, it's important to verify that it will center over a ground mark within some tolerance. If it does rotate, that fact can be checked by simply rotating the instrument and checking centering in opposite orientations (rotated 180°).

The method by which targets and prisms are centered over control points is probably more critical to survey accuracy when someone other than the surveyor is responsible for doing so. Centering accuracy is a relatively easy thing to test to get a realistic value of the standard error of target centering to use in Star*Net.
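To see why centering matters so much on a small site, the following Python sketch converts a lateral centering offset into the direction error it causes at a given sight distance. It is only an illustration: the 2 mm offset and the sight lengths are assumed values, not measurements from this project.

```python
import math

ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi  # about 206264.8


def direction_error_arcsec(offset_m: float, distance_m: float) -> float:
    """Direction error (arc seconds) from a lateral centering offset at a sight distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.atan2(offset_m, distance_m) * ARCSEC_PER_RADIAN


# Assumed values for illustration: 2 mm offset, typical car-park sight lengths.
offset_m = 0.002
for distance_m in (30.0, 100.0, 300.0):
    err = direction_error_arcsec(offset_m, distance_m)
    print(f"{offset_m * 1000:.0f} mm at {distance_m:5.0f} m -> {err:5.1f} arcsec")
```

On short sights, a couple of millimetres of centering error is worth several times the 3" angular spec of the instrument, which is why a realistic centering standard error is worth testing for and entering.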

Mark Mayer, post: 376744, member: 424 wrote:

- Ditto on Kent's remarks. In order to do this up really right we would need to know which grid zone you are working in. But we can proceed based on a local, unscaled adjustment.

- Once I figured out that the coordinates were in ENZ order instead of the NEZ format we commonly use in the US, things went together quickly. Put that ".order EN" command in above the coordinates.

- If you use a ".dat" extension on your data file and view it in StarNet, your commented lines will be green and inline commands red. That makes editing easier. You lose that when you use .txt as the extension.

- After giving the coordinates a small standard error (0.01 0.01 0.01) your data adjusts (local) with a total error factor of about 1.5. That would likely be close to 1.0 if I were properly accounting for the grid. If I hold the control coordinates fixed (! ! ! ) the error factor jumps to over 2, and the distance ASH01-WWS20 shows a residual of 0.05m. This suggests to me that the coordinate of WWS20 is less than perfect.

- In short it looks like your data is free of blunder.

- OK, yes. I am sure that, accuracy-wise, I am not in the realm of it having much effect?

- Done.

- I just renamed the file so I could upload it. It would be nice if there was syntax highlighting for Star*Net files on the website. :-)

- I think this is probably the most sensible. I had to start somewhere with Star*Net. However, on top of the 'initial survey error', there has been a lot of tree clearance since the survey, and track machines have rumbled up and down this tarmac footpath (also ripping out WWS21 :-@, which would have been perfect), and there is cracking of the path and maybe spreading, I don't know. Control WWS23 looks the most solid, in a concrete outfall. WWS20 is in similar tarmac. I did advise the organisation who commissioned the survey to install solid monuments out of the way (I know what a nuisance it is when they are destroyed).

Mark Mayer, post: 376750, member: 424 wrote: Using the "OrdinalSurvey" zone in Starnet yields a passing error factor and lats/longs (and kml file in v8.1) that are in the general vicinity of the OP's screen capture, but roughly 75 meters south.

I don't know of OrdinalSurvey. The professional survey specified "OSGB36 with Newlyn Datum"

Mark Mayer, post: 376749, member: 424 wrote: I worked with StarNet for several years before I gained the confidence to assign small standard errors to all the control coordinates. After all, when you do that StarNet issues a warning message with exclamation points. That's enough to put less stout hearts off. But it makes perfect sense because any control coordinate is going to be imperfect.

I started doing it in conjunction with having "Coordinate changes from entered provisionals" turned on in the report. Any bogus coordinates will show up as having changes. I would then free those up and rerun with the others fixed. But more and more I leave the small standard errors in if project management issues can bear it.

Thanks. I will look into "Coordinate changes from entered provisionals".

wilba, post: 376772, member: 9024 wrote: Thanks. I will look into "Coordinate changes from entered provisionals".

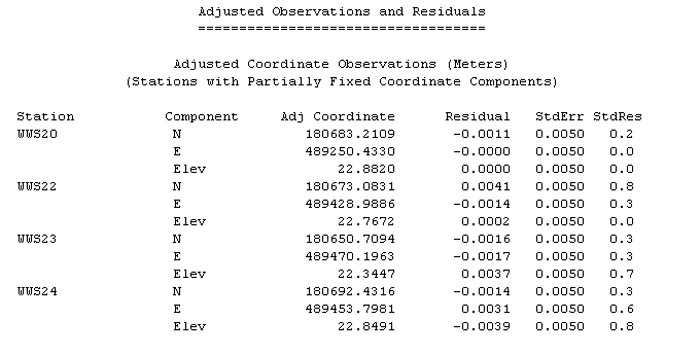

In Star*Net-Pro Ver. 6, if you assign standard errors to control coordinates, you get a listing of residuals that looks something like this:

The above were generated by running an adjustment with 5mm arbitrarily assigned to each of the the ENZ components and assigning best guess estimates of standard errors of instrument and target centering, angles, distances, et cet. If you start out with more relaxed (i.e. larger) standard errors on the coordinates, you can just inspect the residuals to see which control points may have shifted or for which the control values seem otherwise problematically in consistent with the rest of the control.

Kent McMillan, post: 376774, member: 3 wrote: [SNIP] If you start out with more relaxed (i.e. larger) standard errors on the coordinates, you can just inspect the residuals to see which control points may have shifted or for which the control values seem otherwise problematically inconsistent with the rest of the control. [/SNIP]

Excellent advice IMO.

There was one problematic control point in my most recent project where fixing the coord's blew it up; assigning a relatively loose standard error caused it to throw a warning at the lower end, which I didn't sweat as I know my assigned instrument and centering errors are reasonable. Looking at the residuals for this one point tells me something is amiss; now I just have to repair it.

wilba, post: 376719, member: 9024 wrote: Please don't mock I have an old 3" total station (out of calibration) ! But i would like suggestions on how to improve accuracy.

Another opportunity to improve the performance of any TS, new or old, is to enter the station pressure and temperature to the EDM. Note that the pressure that some weather website on your phone reports is corrected to sea level. You need a barometer to read the pressure at your location. Temperature +/-5°C is close enough for most situations.
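As a rough illustration of why the sea-level figure from a weather site is the wrong number to key in, here is a small Python sketch using the standard-atmosphere barometric formula. It is an approximation, not a substitute for a barometer reading, and the 0.3 ppm per hPa figure is only a common rule of thumb (an assumption here; the exact coefficient depends on the EDM, so check the instrument manual). The 150 m site height is hypothetical.

```python
def station_pressure_hpa(sea_level_hpa: float, height_m: float) -> float:
    """Approximate station pressure from sea-level pressure (standard atmosphere)."""
    return sea_level_hpa * (1.0 - 2.25577e-5 * height_m) ** 5.25588


# Rule-of-thumb sensitivity of an EDM distance to pressure (assumed value).
PPM_PER_HPA = 0.3

sea_level_hpa = 1013.25   # pressure as reported by a weather website
site_height_m = 150.0     # hypothetical site height above sea level

p_station = station_pressure_hpa(sea_level_hpa, site_height_m)
pressure_error_hpa = sea_level_hpa - p_station
scale_error_ppm = pressure_error_hpa * PPM_PER_HPA

print(f"station pressure   : {p_station:.1f} hPa")
print(f"error if uncorrected: {pressure_error_hpa:.1f} hPa, about {scale_error_ppm:.1f} ppm")
```

Even at a modest height above sea level, the uncorrected pressure introduces a scale error of several ppm, comparable to the scale factor discrepancy discussed earlier in the thread.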

Mark Mayer, post: 376794, member: 424 wrote: Another opportunity to improve the performance of any TS, new or old, is to enter the station pressure and temperature to the EDM. Note that the pressure that some weather website on your phone reports is corrected to sea level. You need a barometer to read the pressure at your location. Temperature +/-5°C is close enough for most situations.

Although, for relatively short distances, verifying that the instrument constant is correctly set is probably the most important element of accuracy with a total station. That is, it doesn't take too many distances that are systematically long by 30mm to really blow everything up. Long by 2mm is more pernicious since it is less obvious, but is still discoverable.
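One common way to discover a wrong constant is the three-point line test: set three marks A, B, C in a straight line, measure AB, BC and AC, and compare. A minimal Python sketch follows, assuming every measured horizontal distance carries the same additive error k and that B lies on the line between A and C. The distances below are made up for illustration.

```python
def additive_constant_error(ab_m: float, bc_m: float, ac_m: float) -> float:
    """Additive error k in each measured distance, assuming measured = true + k.

    (AB + k) + (BC + k) - (AC + k) = k when B lies on the line A-C.
    """
    return ab_m + bc_m - ac_m


# Hypothetical measured horizontal distances in metres, with B on line A-C.
ab_measured = 40.030
bc_measured = 60.030
ac_measured = 100.030

k = additive_constant_error(ab_measured, bc_measured, ac_measured)
print(f"additive error: {k * 1000:.1f} mm (apply {-k * 1000:.1f} mm to each distance)")
```

With these made-up numbers every distance is long by 30 mm. The same test will also reveal a 2 mm error, provided the measurements are repeated enough times to average out the random noise of the EDM and the centering.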